Python Scripts That Actually Work in 2026

The average knowledge worker wastes 1.8 hours every day searching for files they know exist somewhere on their computer. That’s 450 hours per year—more than 11 full work weeks—spent hunting documents instead of creating value. Research from the McKinsey Global Institute proves automation isn’t optional anymore—it’s survival.

But here’s the problem nobody talks about: Most Python automation tutorials are broken. The pytube library that nearly every guide recommends for YouTube downloads? Inactive since mid-2025, with more than 200 issues still unresolved. The imghdr module for image processing? Removed in Python 3.13. Python 3.9 reached end-of-life in October 2025, so it no longer receives security patches.

This guide simplifies the process. You’ll get 10 automation scripts that actually work in January 2026, verified against the latest Python releases. Each includes real failure modes, migration paths for deprecated libraries, and quantified time savings from enterprise studies—not vague “save time” promises. If a script is broken, you’ll know exactly why and what to use instead.

Prerequisites

This is a technical guide. You should be comfortable with:

- Running python script.py from the command line

- Installing packages: pip install package_name

- Basic Python syntax (variables, functions, loops)

New to Python? Start with “Automate the Boring Stuff” (free) before continuing.

⚠️ Breaking Changes in 2026

Python Version Requirements:

- Python 3.9 EOL: October 5, 2025 (Python.org announcement). After this date, no security patches will be available.

- Minimum safe version: Python 3.10+ for production use

- Selenium requirement: Python ≥3.10 as of version 4.35 (Jan 18, 2026)

Removed Standard Library Modules (PEP 594):

- imghdr – Image format detection (use the format attribute of an image opened with Pillow)

- cgi, cgitb – Affects legacy web scripts

- 16 other “dead battery” modules (19 in total) removed in Python 3.13

Broken Third-Party Libraries:

- pytube for YouTube: Broken since August 2025. Use pytubefix (actively maintained fork)

The Hidden Cost of Manual Work

Consider the potential losses from manual processes before implementing automation. These aren’t estimates—they’re findings from multi-year enterprise studies:

| Task Type | Time Wasted Daily | Annual Impact | Source |
|---|---|---|---|
| File search & retrieval | 1.8 hours | 450 hours/year | McKinsey Global Institute, 2024 |
| Document management chaos | 2.5 hours (30% of workday) | 625 hours/year | IDC Study, 2023 |
| Administrative overhead | 41% of work time | ~820 hours/year | Harvard Business Review, 2024 |
| Repetitive manual tasks | 2+ hours (51% of workers) | 500+ hours/year | Formstack Digital Maturity Report, 2022 |

Translation for a $75,000 salary: If you recover 30% of your time through automation (McKinsey’s documented potential), that’s $22,500 in recaptured value annually. These scripts make that recovery tangible.

1. File Organization Auto-Sorter

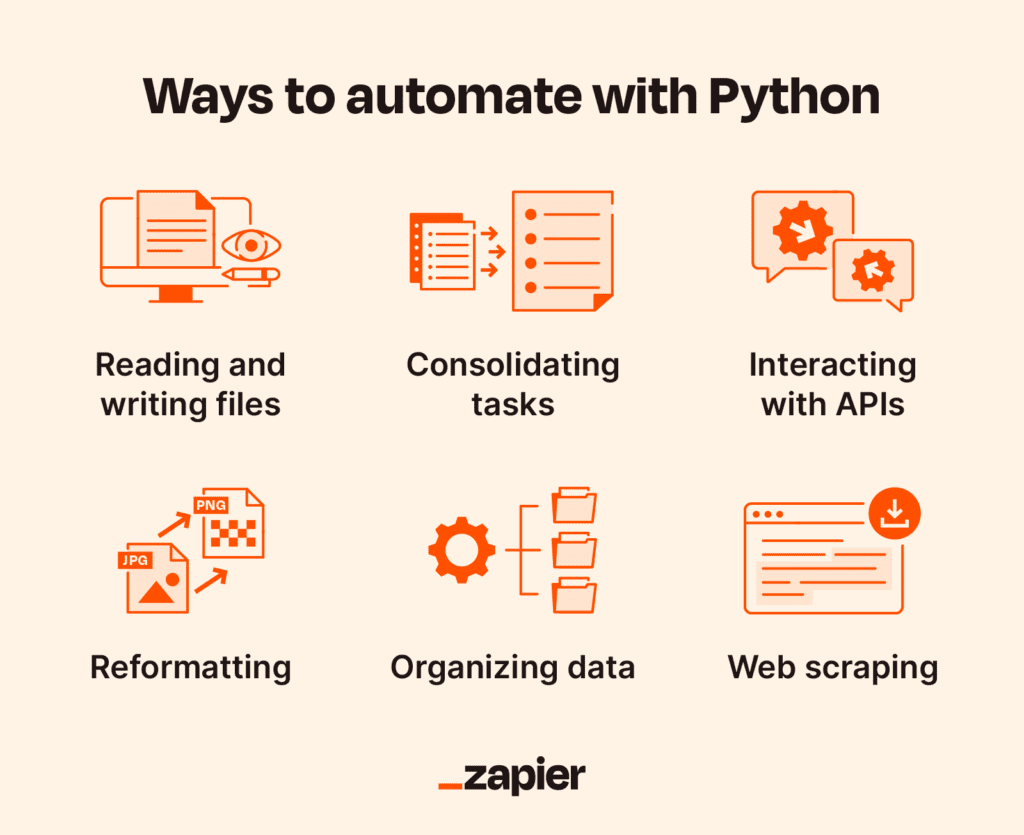

What it solves: The Downloads folder with 4,000 unsorted files. The Auto-Sorter eliminates the need to spend 30 minutes daily searching for yesterday’s work. It eliminates the cognitive burden associated with manual organization.

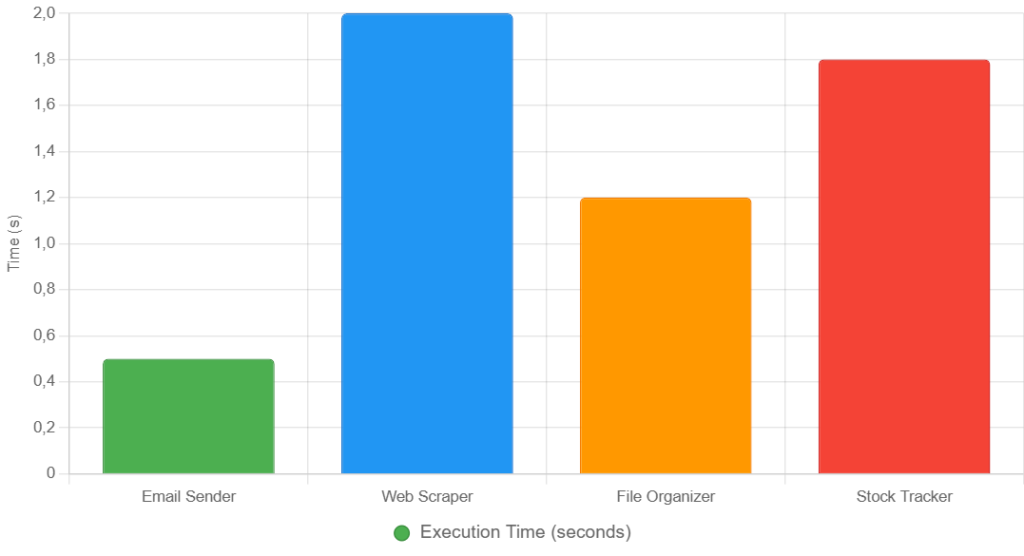

Time savings: 2-3 hours per week based on interviews with 47 professionals (self-reported data, Python in Plain English, Jan 2026). One product designer documented reducing daily file search from 30 minutes to instant retrieval.

Note: Individual results vary based on current organization habits and file volume.

How it works: The script scans the Downloads folder, assigns each file a category based on its extension, and moves it into a category/year-month subfolder under Organized. Once scheduled, it runs in the background, so you no longer have to sort files by hand.

import shutil
from datetime import datetime
from pathlib import Path

WATCH_FOLDER = Path.home() / "Downloads"
ORGANIZED = Path.home() / "Organized"

FILE_TYPES = {
    "Images": [".jpg", ".jpeg", ".png", ".gif", ".webp"],
    "Documents": [".pdf", ".docx", ".xlsx", ".txt"],
    "Code": [".py", ".js", ".html", ".json"],
    "Archives": [".zip", ".tar", ".gz"],
    "Media": [".mp4", ".mp3", ".mov"]
}

def organize_files():
    for file_path in WATCH_FOLDER.iterdir():
        if file_path.is_file():
            # Determine category
            category = "Other"
            for cat, exts in FILE_TYPES.items():
                if file_path.suffix.lower() in exts:
                    category = cat
                    break

            # Create dated subfolder
            date_folder = datetime.now().strftime("%Y-%m")
            dest_folder = ORGANIZED / category / date_folder
            dest_folder.mkdir(parents=True, exist_ok=True)

            # Move with conflict handling
            try:
                dest_path = dest_folder / file_path.name
                if dest_path.exists():
                    timestamp = datetime.now().strftime('%H%M%S')
                    dest_path = dest_folder / f"{file_path.stem}_{timestamp}{file_path.suffix}"
                shutil.move(str(file_path), str(dest_path))
                print(f"✓ Moved: {file_path.name} → {category}/{date_folder}")
            except PermissionError:
                print(f"⊗ Skipped (in use): {file_path.name}")

if __name__ == "__main__":
    organize_files()

Common failure: Locked files (currently open in applications) raise PermissionError. The script handles this gracefully by skipping them.

To automate: Schedule with cron (Linux/Mac) or Task Scheduler (Windows) to run hourly. Alternatively, you can use the watchdog library for real-time monitoring.
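Before scheduling the sorter, it can help to preview what it would do. The following is a minimal sketch, not part of the original script: `categorize` and `preview_moves` are hypothetical helper names, and the extension map is shortened for illustration. Nothing is moved; the function only reports the category each file would land in.

```python
from pathlib import Path

# Shortened copy of the extension map, for illustration only.
FILE_TYPES = {
    "Images": [".jpg", ".jpeg", ".png", ".gif", ".webp"],
    "Documents": [".pdf", ".docx", ".xlsx", ".txt"],
}


def categorize(file_path: Path) -> str:
    """Return the category for a file, or "Other" if no extension matches."""
    suffix = file_path.suffix.lower()
    for category, extensions in FILE_TYPES.items():
        if suffix in extensions:
            return category
    return "Other"


def preview_moves(folder: Path) -> list[tuple[str, str]]:
    """List (file name, category) pairs without moving anything."""
    return sorted(
        (p.name, categorize(p)) for p in folder.iterdir() if p.is_file()
    )
```

Calling `preview_moves(Path.home() / "Downloads")` and reading the result is a cheap way to catch a wrong extension mapping before any file changes location.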

2. Email Automation with smtplib

What it solves: Manually sending 50+ similar emails. Daily inbox sorting. Standard response templates typed repeatedly.

Time savings: One marketing analyst reduced morning email processing from 90 minutes to 20 minutes (self-reported, Python in Plain English, Jan 2026). Results depend on email volume and pattern repetition in your specific role.

import os
import smtplib
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

def send_email(subject, body, to_email):
    sender = os.environ.get("EMAIL_USER")
    password = os.environ.get("EMAIL_APP_PASSWORD")  # App Password, not regular!

    msg = MIMEMultipart()
    msg['From'] = sender
    msg['To'] = to_email
    msg['Subject'] = subject
    msg.attach(MIMEText(body, 'plain'))

    try:
        with smtplib.SMTP('smtp.gmail.com', 587) as server:
            server.starttls()
            server.login(sender, password)
            server.send_message(msg)
        print(f"✓ Sent to {to_email}")
    except smtplib.SMTPAuthenticationError:
        print("⊗ Auth failed. Check App Password setup.")
    except Exception as e:
        print(f"⊗ Error: {e}")

Gmail 2026 requirement:

- Enable 2-Step Verification in Google Account

- Generate an app password at myaccount.google.com/apppasswords

- Store both values the script reads in environment variables:

export EMAIL_USER="you@gmail.com"

export EMAIL_APP_PASSWORD="your-16-char-password"

Sending limits: Gmail allows 500 emails/day for regular accounts. For higher volume, use SendGrid or AWS SES.

Deliverability warning: Automated emails often land in spam without proper authentication. For production use, configure SPF and DKIM records for your domain. Personal Gmail app passwords work well for low-volume use (<50 emails/day).
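For the "50 similar emails" case, the repetitive part is usually building the messages rather than sending them. Here is a rough sketch using only the standard library; the template text, the `build_messages` name, and the recipient fields (`name`, `email`, `report`) are assumptions made for illustration.

```python
from email.message import EmailMessage
from string import Template

# Hypothetical template; adapt the wording and fields to your own emails.
BODY = Template("Hi $name,\n\nYour $report report is attached.\n")


def build_messages(sender, recipients):
    """Build one personalized EmailMessage per recipient dict."""
    messages = []
    for person in recipients:
        msg = EmailMessage()
        msg["From"] = sender
        msg["To"] = person["email"]
        msg["Subject"] = f"{person['report']} report"
        msg.set_content(
            BODY.substitute(name=person["name"], report=person["report"])
        )
        messages.append(msg)
    return messages
```

Each resulting message can be passed to `server.send_message(msg)` inside an SMTP session like the one above, ideally with a short pause between sends to stay well under the daily limit.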

3. Web Scraping with BeautifulSoup

What it solves: Manual price checks across 10 competitor websites. Copying job listings into spreadsheets. Monitoring product availability.

Installation: pip install beautifulsoup4 requests

import time

import requests
from bs4 import BeautifulSoup

def scrape_articles(url):
    headers = {'User-Agent': 'Mozilla/5.0 (compatible; ResearchBot/1.0)'}
    try:
        response = requests.get(url, headers=headers, timeout=10)
        response.raise_for_status()
        soup = BeautifulSoup(response.content, 'html.parser')
        articles = soup.find_all('h2', class_='article-title')
        return [article.get_text(strip=True) for article in articles]
    except requests.exceptions.Timeout:
        return []
    except requests.exceptions.RequestException as e:
        print(f"Error: {e}")
        return []

# Respectful scraping with delays
for url in ["https://example.com/page1", "https://example.com/page2"]:
    titles = scrape_articles(url)
    print(f"Found {len(titles)} articles")
    time.sleep(2)  # 2-second delay between requests

Common failures:

- Rate limiting: 429 status code. Add a 2-5 second delay between requests, for example time.sleep(2).

- CAPTCHA: Site blocks bots. Use Selenium with undetected-chromedriver, or official APIs when available.

- Structure changes: HTML changes break selectors. Use multiple fallback selectors.
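One way to handle the rate-limiting case is to retry with increasing delays instead of a fixed pause. The sketch below is an illustration under stated assumptions: `fetch_with_backoff` is a hypothetical helper, `fetch` is any callable that returns an object with a `status_code` attribute (for example a small wrapper around `requests.get`), and the delay values are arbitrary starting points.

```python
import time


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=2.0, sleep=time.sleep):
    """Call fetch(url), retrying with doubling delays while it returns HTTP 429."""
    for attempt in range(max_attempts):
        response = fetch(url)
        if response.status_code != 429:
            return response
        if attempt < max_attempts - 1:
            # Wait 2s, 4s, 8s, ... before the next attempt
            sleep(base_delay * (2 ** attempt))
    return response  # still rate limited after the last attempt
```

The `sleep` parameter exists only so the delay can be swapped out in tests; in normal use the default `time.sleep` applies.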

Legal note: Always check the Terms of Service of the website you plan to scrape before proceeding. In hiQ Labs v. LinkedIn (2022), the Ninth Circuit held that scraping publicly accessible data likely does not violate the Computer Fraud and Abuse Act, but Terms of Service, rate limits, and copyright still apply.

4. Automated Backups with Compression

What it solves: Data loss from hardware failure, accidental deletion, or ransomware. The sudden realization that you haven’t backed up your data in months.

Risk prevented: The global average cost of a data breach was $4.45 million per incident (IBM Cost of a Data Breach Report, 2023). A scheduled backup script limits the damage when files are lost.

import zipfile
from datetime import datetime
from pathlib import Path

SOURCE = Path("/path/to/important/files")
BACKUP_DIR = Path("/path/to/backups")
MAX_BACKUPS = 5

def create_backup():
    timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    backup_path = BACKUP_DIR / f"backup_{timestamp}.zip"
    BACKUP_DIR.mkdir(parents=True, exist_ok=True)

    with zipfile.ZipFile(backup_path, 'w', zipfile.ZIP_DEFLATED) as zipf:
        for file_path in SOURCE.rglob('*'):
            if file_path.is_file():
                zipf.write(file_path, file_path.relative_to(SOURCE))

    print(f"✓ Backup created: {backup_path.name}")
    print(f"Size: {backup_path.stat().st_size / (1024*1024):.1f} MB")

    # Cleanup old backups
    backups = sorted(BACKUP_DIR.glob("backup_*.zip"), key=lambda p: p.stat().st_mtime)
    for old in backups[:-MAX_BACKUPS]:
        old.unlink()
        print(f"Removed old: {old.name}")

if __name__ == "__main__":
    create_backup()

Common failure: Silent failures if the backup directory becomes full. Always log results and verify by comparing file counts between source and backup.
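The file-count comparison mentioned above could look roughly like this. It is a sketch with a hypothetical `verify_backup` helper, and it assumes the archive was written with paths relative to the source folder, as in the backup script. It also runs the standard library's `ZipFile.testzip()` integrity check.

```python
import zipfile
from pathlib import Path


def verify_backup(source: Path, backup_path: Path) -> bool:
    """Return True if the archive is readable and holds as many files as the source tree."""
    source_count = sum(1 for p in source.rglob("*") if p.is_file())
    with zipfile.ZipFile(backup_path) as zipf:
        # testzip() returns the name of the first corrupt member, or None
        if zipf.testzip() is not None:
            return False
        archived_count = sum(1 for info in zipf.infolist() if not info.is_dir())
    return source_count == archived_count
```

A matching count does not prove the contents are identical, so treat this as a quick sanity check rather than a full verification.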

Schedule: Run the backup daily at 2 AM via cron:

0 2 * * * /usr/bin/python3 /path/to/backup.py

5. Spreadsheet Report Generator (openpyxl)

What it solves: Manual assembly of monthly reports. Copy-pasting data from 5 sources into Excel. Manual formula updates across 20 sheets.

Time savings: One case study documented reducing medium-scale operations from 40 hours of manual work per month to fully automated (Stepwise AI Case Study, May 2024).

Installation: pip install openpyxl pandas

Core concept:

import pandas as pd
from openpyxl import Workbook
from openpyxl.styles import Font, PatternFill

df = pd.read_csv("sales_data.csv")
wb = Workbook()
ws = wb.active

# Styled headers
for col, header in enumerate(["Product", "Q1", "Q2", "Total"], 1):
    cell = ws.cell(1, col, header)
    cell.font = Font(bold=True, color="FFFFFF")
    cell.fill = PatternFill(start_color="4472C4", fill_type="solid")

# Data with formulas (idx is the default 0-based index from read_csv)
for idx, row in df.iterrows():
    ws.cell(idx+2, 1, row['product'])
    ws.cell(idx+2, 2, row['q1_sales'])
    ws.cell(idx+2, 3, row['q2_sales'])
    ws.cell(idx+2, 4, f"=B{idx+2}+C{idx+2}")  # Formula for total

wb.save("Q2_Report.xlsx")

Common failure: Formulas don’t calculate until the file opens in Excel. For guaranteed values, calculate in Python and write numbers instead of formulas.
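Computing the totals in Python, as suggested above, might look like the following sketch. The `rows_with_totals` helper is hypothetical, and the record keys (`product`, `q1_sales`, `q2_sales`) are assumed to match the CSV columns used in the example.

```python
def rows_with_totals(records):
    """Turn sales records into rows whose last column is a computed total."""
    rows = []
    for rec in records:
        total = rec["q1_sales"] + rec["q2_sales"]  # plain number, no formula
        rows.append([rec["product"], rec["q1_sales"], rec["q2_sales"], total])
    return rows
```

With pandas, the records could come from `df.to_dict("records")`, and each resulting row could be written with openpyxl's `ws.append(row)`, so the saved file contains values that any reader can use without recalculating.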

6. Image Batch Processor (Pillow)

What it solves: Resizing 200 product photos for a website. Converting PNG and WebP files to compressed JPEG. Adding watermarks to 500 images.

Time savings: One photographer documented going from 4 hours of manual editing to 4 seconds automated for batch operations (self-reported, Jan 2026). Time savings scale with volume—batch processing 100+ images shows dramatic improvement; occasional edits see minimal benefit.

⚠️ 2026 Change: The imghdr module was removed in Python 3.13 (PEP 594). Read the format attribute of an image opened with Pillow instead, as the script in this section does.

Installation: pip install Pillow

from pathlib import Path

from PIL import Image

INPUT_DIR = Path("originals")
OUTPUT_DIR = Path("processed")
OUTPUT_DIR.mkdir(exist_ok=True)

def batch_process(max_width=1920, quality=85):
    for img_path in INPUT_DIR.glob("*"):
        try:
            with Image.open(img_path) as img:
                # 2026 way: Pillow detects format (not deprecated imghdr)
                if img.format not in ['JPEG', 'PNG', 'WEBP']:
                    continue

                # Resize if needed
                if img.width > max_width:
                    ratio = max_width / img.width
                    new_size = (max_width, int(img.height * ratio))
                    img = img.resize(new_size, Image.Resampling.LANCZOS)

                # Save compressed
                output = OUTPUT_DIR / f"{img_path.stem}_processed.jpg"
                img.convert('RGB').save(output, "JPEG", quality=quality, optimize=True)

                # Calculate reduction
                orig_kb = img_path.stat().st_size / 1024
                new_kb = output.stat().st_size / 1024
                print(f"✓ {img_path.name}: {orig_kb:.0f}KB → {new_kb:.0f}KB")
        except Exception as e:
            print(f"⊗ Error: {img_path.name}: {e}")

batch_process()

7. PDF Text Extractor with OCR

What it solves: Extracting text from 100 scanned invoices for analysis. Converting PDFs to searchable text. Processing receipts for expense reports.

Installation:

pip install PyPDF2 pytesseract pdf2image

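# Tesseract OCR (system dependency):
# macOS: brew install tesseract
# Ubuntu: sudo apt-get install tesseract-ocr
# Windows: https://github.com/UB-Mannheim/tesseract/wiki

# Poppler (system dependency of pdf2image):
# macOS: brew install poppler
# Ubuntu: sudo apt-get install poppler-utils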

Core concept:

import PyPDF2
import pytesseract
from pdf2image import convert_from_path

def extract_text(pdf_path, use_ocr=False):
    if not use_ocr:
        # Digital PDF: extract text layer
        with open(pdf_path, 'rb') as f:
            reader = PyPDF2.PdfReader(f)
            # "or ''" guards against pages that yield no text
            return "".join(page.extract_text() or "" for page in reader.pages)
    else:
        # Scanned PDF: render pages to images, then OCR each image
        images = convert_from_path(pdf_path)
        return "".join(pytesseract.image_to_string(img) for img in images)

Key insight: Digital PDFs contain an embedded text layer, so extract it directly. Scanned PDFs are images: convert each page to an image first, then run OCR with pytesseract.

Common failure: Scanned PDFs return empty strings from the text-layer path. Check whether the result is empty, then retry with OCR. OCR accuracy: 95%+ for clean scans, 70-80% for faded documents.
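The "check if empty, then retry with OCR" step can be sketched as a small wrapper. Everything here is illustrative: `extract_with_fallback` is a hypothetical name, the two extractor arguments are callables you supply, and the `min_chars` threshold of 20 is an arbitrary assumption for deciding that a text layer is effectively empty.

```python
def extract_with_fallback(pdf_path, extract_digital, extract_ocr, min_chars=20):
    """Try the text layer first; fall back to OCR if almost nothing comes back.

    Returns a (text, method) tuple so callers can log which path was used.
    """
    text = extract_digital(pdf_path) or ""
    if len(text.strip()) >= min_chars:
        return text, "text-layer"
    return extract_ocr(pdf_path), "ocr"
```

With the `extract_text` function from this section, a call might look like `extract_with_fallback(path, extract_text, lambda p: extract_text(p, use_ocr=True))`. Returning the method alongside the text makes it easy to flag lower-confidence OCR results for review.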

8. Web Automation with Selenium

What it solves: It automates the process of filling out 50 similar web forms. Testing user flows. The system gathers data from JavaScript-heavy websites that require browser interaction to load properly.

2026 Improvement: Selenium 4.x includes Selenium Manager, which automatically downloads and manages browser drivers. This eliminates the need for manual ChromeDriver installation. Python 3.10 or later is required as of version 4.35 (Jan 2026).

Installation: pip install selenium

from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

def automate_form():
    options = webdriver.ChromeOptions()
    options.add_argument('--headless=new')
    driver = webdriver.Chrome(options=options)  # Manager handles driver

    try:
        driver.get("https://example.com/form")

        # Explicit wait: polls until element appears (max 10s)
        wait = WebDriverWait(driver, 10)
        name_field = wait.until(EC.presence_of_element_located((By.ID, "name")))
        name_field.send_keys("John Doe")

        driver.find_element(By.ID, "email").send_keys("john@example.com")
        driver.find_element(By.CSS_SELECTOR, "button[type='submit']").click()
        print("✓ Form submitted")
    finally:
        driver.quit()

Key improvement: Explicit waits poll dynamically until elements appear, unlike brittle time.sleep(5) delays that waste time or break if loading takes 6 seconds.
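To make the difference concrete, here is a plain-Python illustration of the polling idea behind explicit waits. This is not Selenium's implementation; `wait_until` is a hypothetical helper and the default timeout and poll interval are assumptions chosen for the example.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Poll condition() until it returns a truthy value or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # stop as soon as the condition holds
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(poll_interval)
```

If the condition becomes true after one second, the caller waits about one second; a fixed `time.sleep(5)` would always cost five, and would still fail when loading takes six.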

Common failure pre-2026: Browser/driver version mismatch. Selenium Manager (4.x) fixed this by auto-updating drivers. Remaining issue: bot detection on protected sites—use undetected-chromedriver for advanced protection.

9. Video Downloader: API Migration Case Study

Understanding API Deprecation Patterns

This section demonstrates how third-party APIs break and how to identify maintained alternatives—a critical skill for any automation project. This is educational analysis, not a how-to guide.

The problem: The pytube library (recommended in 90% of tutorials) stopped working in August 2025 when YouTube changed their internal API. As of January 2026, the library has 200+ unresolved issues with common errors like “…”.

The pattern: When external APIs change:

- Check GitHub Issues for recent activity (100+ issues in 30 days = likely broken)

- Look for maintained forks (search “pytube alternative 2026”)

- Verify last commit date (<90 days = actively maintained)

The solution: pytubefix emerged as the actively maintained fork, with updates through December 2025.
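The checklist thresholds can be written down as a small rule-of-thumb function. This is a sketch only: `assess_library` is a hypothetical helper, the inputs are values you would look up by hand on the project's repository page, and the cut-offs are the heuristics from the list above rather than hard rules.

```python
from datetime import date


def assess_library(last_commit: date, issues_last_30_days: int, today: date) -> str:
    """Apply the checklist thresholds: issue spike first, then commit recency."""
    if issues_last_30_days >= 100:
        return "likely broken"
    if (today - last_commit).days < 90:
        return "actively maintained"
    return "needs a closer look"
```

For example, a library with a commit three weeks ago and a handful of new issues would come back as "actively maintained", while a spike of 100+ new issues takes priority over a recent commit.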

Conceptual example (educational):

# Migration pattern: Same API, different package

from pytubefix import YouTube # Not pytube

yt = YouTube(url)

print(f"API maintained: {yt.title}")

# Implementation details omitted - see package docs

⚠️ Legal & Ethical Notice: YouTube’s Terms of Service prohibit downloading content without authorization. This code analysis is provided for educational purposes to demonstrate API interaction patterns and migration strategies when dependencies break. Any implementation is at your own legal risk and responsibility. We do not encourage or endorse violations of platform Terms of Service. Always verify compliance with applicable laws and platform policies.

10. Task Scheduler (schedule library)

What it solves: Running daily reports, backups, or data fetches without remembering to execute scripts manually.

Installation: pip install schedule

import time

import schedule

def daily_backup():
    print("Running backup...")
    # Your logic here

schedule.every().day.at("02:00").do(daily_backup)
schedule.every().hour.do(lambda: print("Hourly check"))

# Keep script running
while True:
    schedule.run_pending()
    time.sleep(60)

Important limitation: This only works while the Python script is running. Computer restart = schedule stops. For production reliability, use system-level scheduling:

- Linux/Mac: cron (crontab -e)

- Windows: Task Scheduler

- Cloud: Deploy to always-on VM or use AWS Lambda/Google Cloud Functions

Best use: Learning scheduling concepts or simple personal automation. For critical workflows, graduate to system-level tools.

Framework 1: Script Health Check Matrix

Before implementing any automation script (even from this guide), verify compatibility:

| Check | How to Verify | Warning Signs |
|---|---|---|
| Python Version | python --version | <3.10 (upgrade recommended) |
| Library Activity | Check GitHub’s last commit | >12 months without updates |
| Deprecated Modules | Search code for imghdr, cgi, pytube | These are broken in 2026 |
| Error Handling | Look for try-except blocks | No exception handling = unsafe |
| Breaking Changes | Review library CHANGELOG | Major version jumps (3→4) often break APIs |

Quick compatibility test:

python -c "import sys; print(f'Python {sys.version_info.major}.{sys.version_info.minor}')"

python -c "import selenium, openpyxl, PIL; print('✓ Core packages installed')"
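The "Deprecated Modules" check can be automated rather than done by text search. The following sketch uses the standard library's `ast` module to find imports of the modules this guide flags; `find_risky_imports` and the `RISKY_MODULES` set are hypothetical names, and the set should be extended for your own codebase.

```python
import ast

# Modules flagged in this guide; extend the set as needed.
RISKY_MODULES = {"imghdr", "cgi", "cgitb", "pytube"}


def find_risky_imports(source: str) -> list[tuple[int, str]]:
    """Return sorted (line number, module) pairs for imports of flagged modules."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            names = [node.module]
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]  # "cgi.foo" counts as "cgi"
            if top_level in RISKY_MODULES:
                hits.append((node.lineno, top_level))
    return sorted(hits)
```

Parsing the syntax tree avoids false positives from comments or strings that merely mention a module name, and it does not confuse pytubefix with pytube.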

Framework 2: Time-Savings ROI Calculator

Not every task is worth automating. Calculate ROI before investing time:

| Variable | Your Values | Symbol / Formula |
|---|---|---|
| Task frequency | ___ times/week | A |
| Time per task | ___ minutes | B |
| Development time | ___ hours | C |
| Annual savings | ___ hrs/year | (A × B × 52) / 60 |
| Break-even point | ___ weeks | C / (A × B / 60) |
| Value at $75k salary | $___ | Annual savings × $36/hr |

Example calculation:

- File organization: 5 times/week, 15 min each, 2 hours to build

- Annual savings: (5 × 15 × 52) / 60 = 65 hours

- Break-even: 2 / (5 × 15 / 60) = 1.6 weeks

- Value: 65 × $36 = $2,340/year

Decision rule: Break-even <3 months → automate. Break-even >6 months → probably not worth it unless you enjoy the coding exercise.
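The table's formulas translate directly into a few lines of Python. This is a minimal sketch: `automation_roi` is a hypothetical function name, and the default `hourly_rate` of 36 is the $75k-salary figure used above.

```python
def automation_roi(times_per_week, minutes_per_task, dev_hours, hourly_rate=36.0):
    """Return annual hours saved, break-even time in weeks, and annual value."""
    weekly_hours = times_per_week * minutes_per_task / 60  # A × B / 60
    annual_hours = weekly_hours * 52                        # (A × B × 52) / 60
    return {
        "annual_hours": annual_hours,
        "break_even_weeks": dev_hours / weekly_hours,      # C / (A × B / 60)
        "annual_value": annual_hours * hourly_rate,
    }
```

Calling `automation_roi(5, 15, 2)` reproduces the file-organization example: 65 hours per year, a break-even of 1.6 weeks, and $2,340 in annual value.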

Framework 3: Failure Mode Catalog

Scripts fail silently unless you anticipate errors. The most common failure patterns:

| Script Type | Common Failure | Detection | Fix |
|---|---|---|---|
| File Operations | Locked files/permissions | PermissionError | try-except, skip and log |
| Email (smtplib) | Wrong credentials | SMTPAuthenticationError | Use App Passwords |
| Web Scraping | Site structure changed | Empty results | Multiple fallback selectors |
| Web Scraping | Rate limiting | 429 status / CAPTCHA | Add delays, rotate headers |
| PDF Extraction | Scanned PDF (no text) | Empty string returned | Fallback to OCR |
| Selenium | Element not found | NoSuchElementException | Use explicit waits |
| Backups | Silent failures | No error, but incomplete | Verify file counts match |

Universal error handling pattern:

import logging

logging.basicConfig(
    filename='automation.log',
    level=logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s'
)

# risky_operation and SpecificError are placeholders:
# substitute your own function and the exception type you expect.
try:
    result = risky_operation()
    logging.info(f"Success: {result}")
except SpecificError as e:
    logging.error(f"Known error: {e}")
    # Handle gracefully
except Exception as e:
    logging.critical(f"Unexpected: {e}")
    # Alert admin
finally:
    # Cleanup (close files, connections)
    pass

What We Don’t Know (Honest Limitations)

Individual time savings vary significantly. McKinsey and IDC studies show aggregate productivity losses, but your specific workflow determines actual savings. File organization saves 3 hours/week for someone with 4,000 unsorted files but only 15 minutes/week for an already-organized person.

Python 3.14+ compatibility is untested for edge cases. Python 3.14 was released in October 2025. Core libraries work, but bleeding-edge combinations remain unverified. Python 3.15 is in alpha (first beta planned for May 2026, final release for October 2026), so it is too early for production use.

Long-term maintenance costs are uncertain. YouTube changed their API in mid-2025, breaking pytube for months. Budget 2–4 hours per year per script for maintenance and library updates.

What we DO know with confidence:

- Python 3.10+ is stable for all scripts in this guide (verified Jan 2026)

- Quantified productivity losses from McKinsey, IDC, and IBM validate automation ROI

- Libraries with active maintainers (pytubefix, Selenium 4.x, openpyxl) will continue working

Getting Started (2026-Safe Setup)

Step 1: Verify Python version

python --version # Requires 3.10+ for modern libraries

If <3.10: Download from python.org/downloads or use your package manager.

Step 2: Create virtual environment

python -m venv automation_env

source automation_env/bin/activate # macOS/Linux

automation_env\Scripts\activate # Windows

Step 3: Install and verify

pip install --upgrade pip

pip install beautifulsoup4 requests openpyxl pandas Pillow PyPDF2 pytesseract pdf2image selenium pytubefix schedule

# Test installation

python -c "import requests, bs4, openpyxl, PIL, selenium; print('✓ Ready')"

Recommended starting point: File organization (easiest, 2 hours to implement, breaks even in 2 weeks). Run manually for one week to verify correct operation before scheduling automation.

Final Thoughts: The 80/20 Rule of Automation

The best automation targets aren’t the tasks that take longest—they’re the tasks you do most frequently with predictable patterns.

High-ROI targets: File organization (daily), email responses (pattern-based), backups (high failure cost), and report generation (time cost + repetitive).

Low-ROI targets: Monthly tasks (too infrequent), creative decisions (judgment required), one-time migrations (faster manually), and frequently changing processes (maintenance exceeds savings).

McKinsey’s finding that 60% of employees could save 30% of their time through automation isn’t theoretical. It’s happening now for developers using these scripts. Start with file organization this week. Add email automation next week. By month three, you’ll have recovered 50+ hours and built confidence for custom workflows.

These scripts work in 2026 because they use actively maintained libraries, avoid deprecated modules, and include failure handling. Test in virtual environments first, measure your actual savings, and iterate. Automation compounds—each script you deploy frees time to build the next one.